By Edrine Wanyama

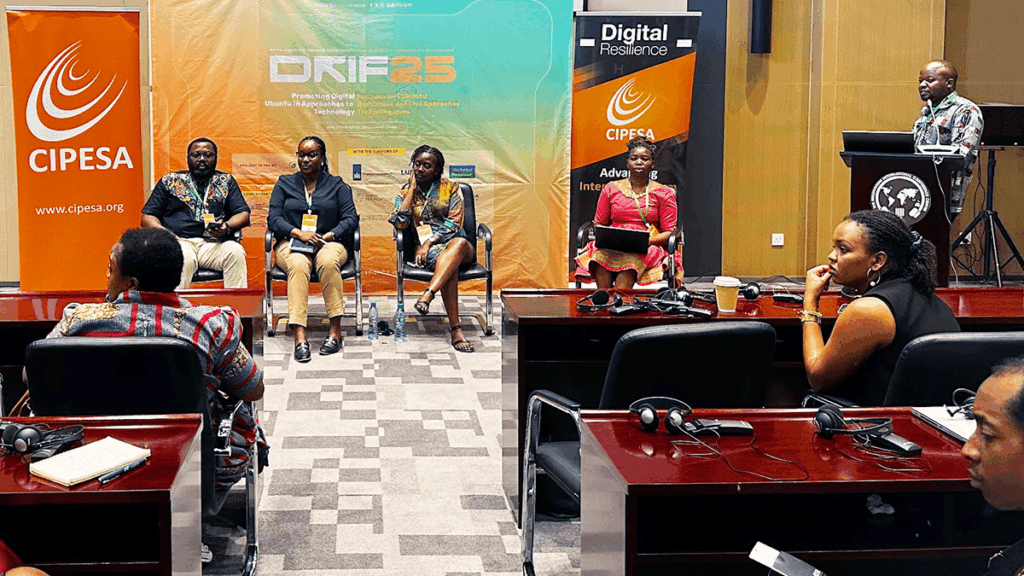

At the 2025 Digital Rights and Inclusion Forum (DRIF) recently held in Lusaka, Zambia, the Collaboration on International ICT Policy for East and Southern Africa (CIPESA) hosted a session examining content moderation and tech accountability from a Global South perspective.

While recognising the role of platforms in shaping public discourse, the session also noted their role in spreading disinformation and amplifying hate speech. In this regard, panelists raised critical concerns around transparency, bias in algorithms, and inadequate reflection of local languages and contexts, emphasising the obligations of tech companies, the rights of users, and the responsibility of governments and civil society in ensuring accountability while safeguarding free expression online.

Gaps in content moderation by platforms have often been the basis for governments to develop overly broad and regressive laws, expand their influence over social media, and weaponise emerging information disorder threats such as hate speech and disinformation.

In January 2025, Meta announced that it would end its use of independent fact-checkers in the United States of America and replace them with user-driven “community notes”. Although the announcement did not mention Africa, its impact is bound to reach the continent. Meta’s decision is especially troubling for Africa, given its vast linguistic and cultural diversity, low levels of digital and media literacy, rising challenges such as hate speech and election-related disinformation, the absence of context-specific content moderation policies, and insufficient investment in local fact-checking efforts.

According to Hlengiwe Dube, Program Manager of the Expression, Information and Digital Rights Unit at the Centre for Human Rights, University of Pretoria, “The data on content moderation in African countries reveals that content moderation practices often fail to consider local contexts, languages, and the socio-political environment. There is a growing call for reforms that prioritise local expertise, linguistic diversity, and human rights in the development and implementation of content moderation policies.”

Commenting on the use of Artificial Intelligence (AI), algorithmic transparency and accountability in automated moderation systems, Dube further noted that “When AI-driven content moderation only partially considers Africa’s languages, contexts, and conflicts, it risks doing more harm than good. True tech accountability requires systems co-created with local expertise and grounded in human rights, transparency, and justice.”

Patricia Ainembabazi, Policy and Advocacy Officer at CIPESA, reiterated the leverage the continent has in the tech ecosystem: “Africa remains a key market for global tech companies. This influence must be used to demand alignment with regional frameworks like the recently adopted African Commission’s Resolution 630 (LXXXII) 2025 on developing guidelines to assist States monitor technology companies in respect of their duty to maintain information integrity through independent fact checking, which calls for stronger oversight and accountability of platforms.”

This call was also emphasised by Angella Minayo, Programs Officer on Digital Rights and Policy at ARTICLE 19 Eastern Africa, who stressed the need for robust frameworks on business and human rights obligations for tech companies, adding that, “these frameworks will clarify the corporate human rights obligations such as human rights due diligence, human rights risk mitigation and access to remedy for human rights violations.”

Key recommendations that emerged from the session include:

- Governments should develop clear and comprehensive laws, and amend or repeal regressive provisions, in order to protect rights and promote transparency and accountability. They should also support awareness-raising programmes on the responsible application and use of online content.

- Tech companies should incorporate more African-language content and context-specific risk indicators, and involve regional experts in both dataset curation and annotation, in order to build multilingual and culturally competent AI.

- Tech companies should provide access to more granular, disaggregated data, including information on content removals, appeals, and reinstatements.

- Tech companies should conduct pre-deployment audits of AI moderation systems, especially in high-risk markets such as politically unstable regions. These audits should evaluate whether an algorithm poses risks to human rights such as freedom of expression and other civil and political rights, and to the rights of minority groups.

- Civil society, academics, and user communities should take an active part in co-designing platform policies, testing moderation tools, flagging failures, and building the capacity of citizens.

- Tech companies should, in addition to making voluntary commitments, develop binding frameworks through regional bodies like the African Union and other international mechanisms. The frameworks should be anchored in the principles of algorithmic fairness and explainability as rights-based obligations.